NIPS 2016

I have just returned from NIPS (technically, I am still travelling around the Canary Islands). Surprisingly, this was my first NIPS. I enjoyed the presentations of great work, as well as talking to machine-learning folks and friends. Here is an edited high-level write-up I prepared for my co-workers at BlippAR, hence the focus on computer vision and deep learning. The scope of the conference is of course much broader.

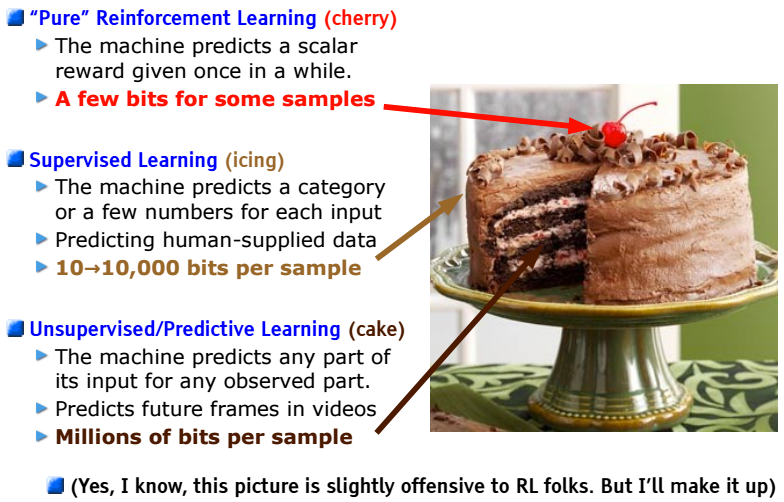

At the beginning of the conference, Yann LeCun gave a keynote that could be considered an agenda-setting speech for the whole event. Besides starting the layered cake meme, he mentioned the two ideas he considers the brightest of the last decade: adversarial training and external memory. My impression is that the most frequent buzzwords at the conference were:

- generative adversarial networks (GAN),

- recurrent neural networks (RNN),

- reinforcement learning (RL),

- robotics (done with or without RL).

Great engineers are good at defining useful abstractions. LeCun's layered cake metaphor was heavily re-used throughout the conference.

Andrew Ng gave a keynote on the workflow of applied DL research. Most of the ideas are simple, yet often neglected. Here are a few of them. The most important metrics to track are human error, training set error, and validation error. The gap between human error and training set error is the bias. It can be reduced with a bigger model or longer training. The gap between validation error and training set error is due to variance. Once the bias problem is addressed, you can add more data or increase regularisation to reduce the variance.

In practice, however, the test data often come from a different distribution than the training data. In that case, you might want to have two validation sets: one drawn from the training distribution and another from the test distribution. If you don't have the latter, you will be solving the wrong problem. Domain adaptation methods may also help, but they are not quite practical at the moment.
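To make the bookkeeping concrete, here is a minimal Python sketch of that diagnosis. The function name `diagnose`, the argument names, and the advice string for the distribution-mismatch case are my own shorthand, not something taken from the slides, so treat them as assumptions.

```python
def diagnose(human_error, train_error, train_dev_error, test_dev_error):
    """Split the overall error into the gaps discussed above.

    All arguments are error rates in [0, 1]:
      train_dev_error - validation set drawn from the training distribution
      test_dev_error  - validation set drawn from the test distribution
    """
    gaps = {
        "bias": train_error - human_error,
        "variance": train_dev_error - train_error,
        "distribution_mismatch": test_dev_error - train_dev_error,
    }
    advice = {
        "bias": "try a bigger model or train longer",
        "variance": "add more data or increase regularisation",
        # Assumption: this last hint is my own summary, not a quote.
        "distribution_mismatch": "collect validation/training data closer to the test distribution",
    }
    worst = max(gaps, key=gaps.get)  # the largest gap is the one to attack first
    return gaps, worst, advice[worst]


gaps, worst, suggestion = diagnose(
    human_error=0.01, train_error=0.02, train_dev_error=0.03, test_dev_error=0.10
)
print(worst, "->", suggestion)
```

The point of the design is simply that each gap maps to a different remedy, so it pays to measure them separately rather than staring at a single validation number.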

He also strongly advocates a centralised data storage infrastructure that is easily accessible to all team members. It speeds up progress a lot.

Currently, supervised learning dominates the landscape, while unsupervised and reinforcement learning have been around for some time but still have not taken off. Ng predicts that the second-biggest area (after SL) will be neither of those, but rather transfer learning and multi-task learning. Those are important practical areas that are almost ignored by academic researchers.

There were seemingly few papers on architectural design (for supervised learning). Daniel Sedra gave a great talk on neural networks with stochastic depth. The idea is to drop entire layers during training in ResNet-like architectures. That allows them to grow to 1000+ layers, which gives a significant improvement in accuracy on CIFAR (and a less significant one on ImageNet). Unfortunately, at test time all the layers are present, so the inference time may be a problem. Another improvement is to add an identity connection between each pair of layers, which also helps a bit.
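A rough sketch of the layer-dropping idea in plain Python, with lists standing in for tensors. The helper names are hypothetical, and the linear decay of survival probabilities and the test-time scaling follow my reading of the paper, so treat both as assumptions rather than a reference implementation.

```python
import random


def survival_probs(num_blocks, p_last=0.5):
    """Survival probability per block, decaying linearly down to p_last."""
    return [1.0 - (l / num_blocks) * (1.0 - p_last)
            for l in range(1, num_blocks + 1)]


def residual_block(x, transform, p_survive, training, rng=random):
    """One residual block; `transform` maps a list of floats to a list."""
    if training:
        if rng.random() < p_survive:
            # Block survives: usual residual update x + f(x).
            return [xi + fi for xi, fi in zip(x, transform(x))]
        # Block dropped: only the identity path is left.
        return list(x)
    # Test time: every block is evaluated, scaled by its survival probability.
    return [xi + p_survive * fi for xi, fi in zip(x, transform(x))]


def forward(x, transforms, training, rng=random):
    """Run a stack of residual blocks with stochastic depth."""
    probs = survival_probs(len(transforms))
    for transform, p in zip(transforms, probs):
        x = residual_block(x, transform, p, training, rng)
    return x


blocks = [lambda v: [0.1 * t for t in v]] * 4
print(forward([1.0, 2.0], blocks, training=True, rng=random.Random(0)))
print(forward([1.0, 2.0], blocks, training=False))
```

Note that the test-time branch calls `transform` in every block, which is exactly why inference does not get any cheaper.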

Michael Figurnov, my academic sibling, presented work on speeding up inference using so-called perforated convolutions. The idea is to skip some multiplications that do not affect the result of the operation much. In the follow-up work, he and his co-authors suggest skipping whole parts of the computation graph once they are estimated to be unimportant. This can potentially lead to a significant speed-up, but for most practitioners it is a question of support in libraries such as cuDNN. Hopefully, we'll be able to use it soon.

The method suggests not wasting computation time on the background, and instead evaluating more ResNet layers where it pays off more.
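For intuition, the perforation idea can be sketched in one dimension: compute the convolution only at a subset of output positions and copy the nearest computed value into the skipped ones. The regular grid mask, the nearest-neighbour fill, and the function names below are simplifying assumptions of mine; the actual work operates on 2D feature maps inside a CNN.

```python
def conv1d_valid(signal, kernel):
    """Plain 'valid' 1D correlation, evaluated at every output position."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def perforated_conv1d(signal, kernel, stride=2):
    """Evaluate only every `stride`-th output and fill the gaps.

    Skipped positions reuse the value of the nearest computed position,
    so the number of multiplications drops roughly by a factor of `stride`.
    """
    k = len(kernel)
    n_out = len(signal) - k + 1
    computed = {
        i: sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(0, n_out, stride)
    }
    return [computed[min(computed, key=lambda c: abs(c - i))]
            for i in range(n_out)]


signal = [1, 2, 3, 4, 5, 6]
kernel = [1, 1]
print(conv1d_valid(signal, kernel))
print(perforated_conv1d(signal, kernel, stride=2))
```

The approximation is only good where neighbouring outputs are similar, which is the trade-off between speed and accuracy that the paragraph above alludes to.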

There was a demo I missed that showed real-time object detection on a mobile device (if you have a link, please share it with me!). It is based on YOLO, and the idea is to pre-compute and memoize some parts of the computational graph, then find the best approximation at inference time. There is some loss in accuracy, but real-time inference on the board looks impressive!

The most interesting improvement for deep learning optimisation seems to be weight normalisation. The paper builds on the idea of batch normalisation. It tries to explain its positive effect (i.e. staying on the unit sphere) and uses this intuition to propose an alternative way to stabilise the training process.
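As I understand it, the core of the method is to write each weight vector as w = g * v / ||v||, so that the length g and the direction v are learned as separate parameters. A minimal sketch in plain Python (the function name is my own, and a real implementation would also need the corresponding gradients):

```python
import math


def weight_norm(v, g):
    """Return w = g * v / ||v||: direction comes from v, length from g."""
    norm = math.sqrt(sum(vi * vi for vi in v))
    return [g * vi / norm for vi in v]


w = weight_norm(v=[3.0, 4.0], g=2.0)
length = math.sqrt(sum(wi * wi for wi in w))
print(w, length)
```

Whatever the scale of `v`, the resulting vector always has length `g`, which is what decouples the two during optimisation.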

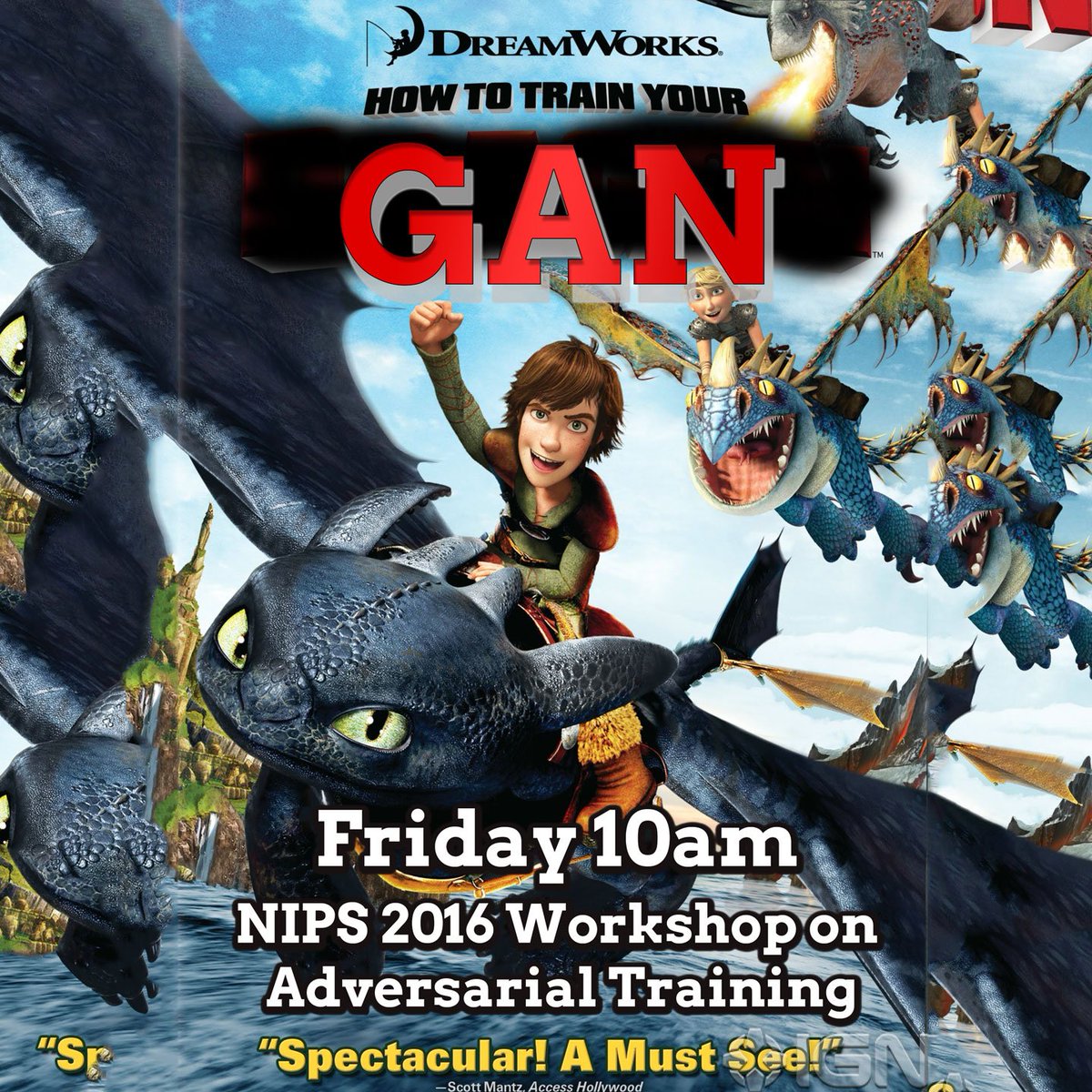

As I said above, GANs are a big (and controversial) topic here. They seem to be the most popular generative model for images, although variational autoencoders are still around. The training process is quite unstable, but the community is increasingly finding ways to stabilise it. Most of them are hacks born of trial and error. Soumith Chintala presented a list of 15 tricks in his talk How to train a GAN? He promised to publish the slides later, but here is the writeup. If you ever have to train GANs, make sure you read it first. There is no way you can guess this combination yourself (and papers typically do not mention all those hacks).

Poster by Soumith. TL;DR: use magic.

In the visual search field, there is the Universal correspondence network paper. Instead of matching features, it suggests running a single pass through the network to find dense correspondences. The authors claim that, compared to extracting deep features for patches, the number of distance computations drops from quadratic to linear (although a spatial index can also mitigate the issue). The network appears to learn some notion of geometry/topology, i.e. one can train it to do rigid matching, as well as to focus more on semantic similarity. The results they showed were on very similar images, so they did not impress me much, although that may have been done only to ease the visualisation.

Deep learning continues to disrupt traditional computer-vision pipelines.

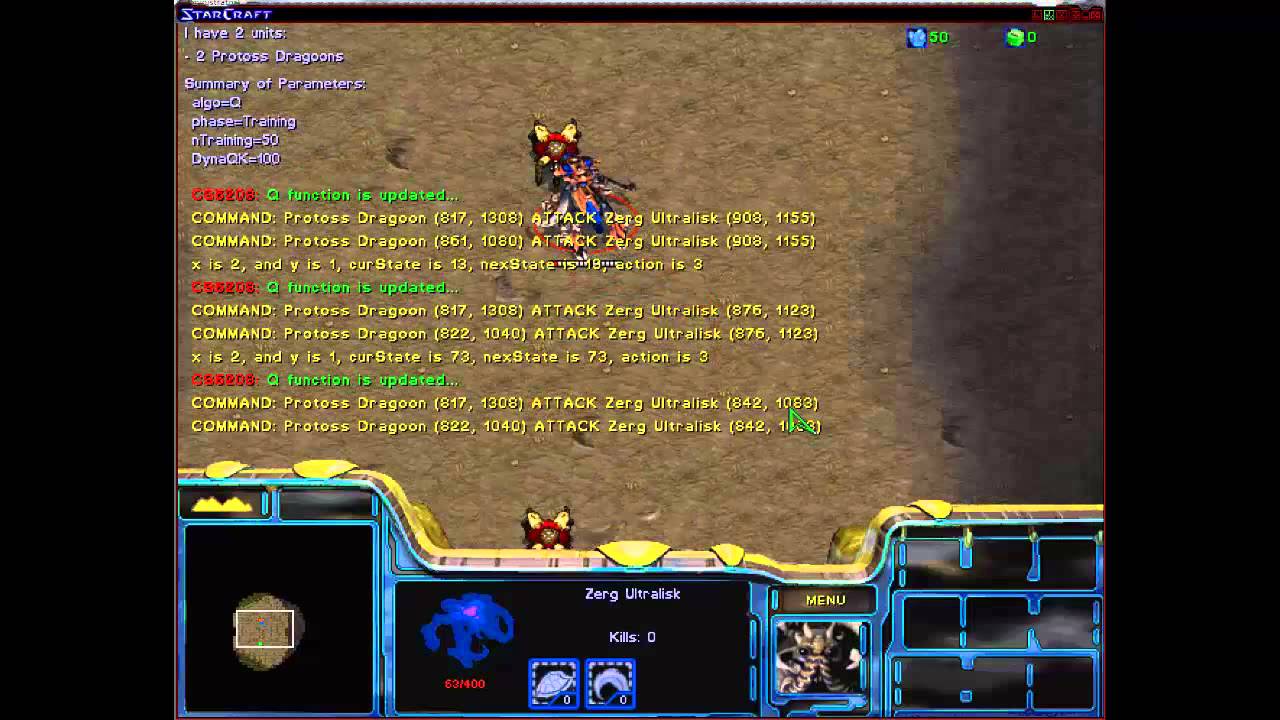

For reinforcement learning, there is no single paper that I can pick as the brightest, but the biggest impact seems to come from the release of OpenAI Universe and DeepMind Lab, both of which are training environments for reinforcement learning algorithms built on computer-generated imagery (i.e. video games). It seems to be a valid step towards building intelligent agents that operate in the real world, although there is scepticism about whether the final step can be made: the methods are data-hungry, and it is difficult to get a lot of data from anything other than a simulated environment, let alone a frequent reward signal. This is one of the reasons researchers study imitation learning, where a robot learns the cost function from demonstrations rather than taking it for granted. With this approach, we may be able to teach robots to perform tasks like pouring water into a glass by having a person or another robot show them how to do it.

DeepMind collaborated with Blizzard to provide a StarCraft API to facilitate the training of RL agents. Now you have an excuse to play games at work.

Bayesian methods seem to be quite popular, and apparently can be successfully combined with neural networks (variational autoencoders and their variants are used a lot). The workshops on approximate variational inference and Bayesian deep learning saw a large number of papers. The tutorial on variational inference (slides-pdf-1, slides-pds-2) reflected significant progress in recent years: things like the reparametrisation trick and stochastic optimisation have made it possible to estimate quite sophisticated posterior distributions.
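For readers who have not met the reparametrisation trick, here is a minimal sketch for a one-dimensional Gaussian: instead of sampling z directly from N(mu, sigma^2), sample parameter-free noise and transform it, so that z becomes a deterministic (and differentiable) function of mu and sigma. The function name and the log-variance parameterisation are my own choices for illustration.

```python
import math
import random


def sample_latent(mu, log_var, rng):
    """Draw z ~ N(mu, exp(log_var)) as z = mu + sigma * eps."""
    eps = rng.gauss(0.0, 1.0)        # noise that does not depend on parameters
    sigma = math.exp(0.5 * log_var)  # log-variance keeps sigma positive
    return mu + sigma * eps


rng = random.Random(0)
samples = [sample_latent(mu=2.0, log_var=math.log(0.25), rng=rng)
           for _ in range(10000)]
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(round(mean, 2), round(var, 2))
```

Because the randomness lives entirely in `eps`, gradients with respect to `mu` and `log_var` can flow through the sample, which is what makes stochastic optimisation of the variational objective practical.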

Of course, an important part of the conference is socialising. So thanks to everybody I had a chance to meet at the conference and have a productive (or just fun) conversation with. See you at future conferences!